I’ve been working recently on a data pipeline composed of independent modules written in Python and Rust that download, transform, quality-check, and aggregate a bunch of data into tables that are easy to analyze downstream. As the pipeline grows, it’s becoming unwieldy to run each module one by one. I have to consult my diagram to remember which module to run first, which one next, how to verify the results, and so on. It’s time to automate the process.

Airflow is a powerful, open-source data orchestration tool used widely across industries. It is actively developed by a large community that keeps adding more features than I know what to do with. While Airflow can schedule and monitor large workloads distributed across clusters of machines, my pipeline doesn’t need nearly that much scale; it still runs on a single machine. Airflow can handle my workload easily, but one feature of my small-but-kinda-complicated pipeline needed some tweaking to work with it.

The problem: how to monitor modules internally

When I run my pipeline with Airflow, Airflow’s GUI lets me see which modules have succeeded, which have failed, and which are still running. But my modules have their own monitoring mechanism: print statements that tell me what the module is doing and how far along it is. Yes, print statements are simple and old-fashioned, but they are quite handy. When I’m running a module individually, I can glance at the terminal and see its progress. I didn’t want to give up this feature, nor did I want to rewrite a bunch of my code.

Do Airflow logs solve the problem?

Fortunately, Airflow logs the print statements. One can look in the Airflow logs directory (at ~/airflow/logs by default) for a specific task of a specific DAG run and find parts of the log that look something like this:

[2023-09-01T18:35:16.006-0400] {subprocess.py:86} INFO - Output:

[2023-09-01T18:35:16.034-0400] {subprocess.py:93} INFO -

[2023-09-01T18:35:16.035-0400] {subprocess.py:93} INFO - MEOW

[2023-09-01T18:35:16.035-0400] {subprocess.py:93} INFO - /tmp/airflowtmph5ymaof2

[2023-09-01T18:35:16.052-0400] {subprocess.py:97} INFO - Command exited with return code 0

[2023-09-01T18:35:16.064-0400] {taskinstance.py:1400} INFO - Marking task as SUCCESS. dag_id=tritask_meow, task_id=task01, execution_date=20230901T223512, start_date=20230901T223515, end_date=20230901T223516

[2023-09-01T18:35:16.100-0400] {local_task_job_runner.py:228} INFO - Task exited with return code 0

[2023-09-01T18:35:16.116-0400] {taskinstance.py:2784} INFO - 1 downstream tasks scheduled from follow-on schedule check

In this case, the task’s module printed a newline, the word “MEOW” (yes, I like cats), and the path of the temporary directory that Airflow was using (/tmp/airflowtmph5ymaof2).

The logs are useful for seeing the print statements after the run is complete, but I want to see the prints immediately, without manually rummaging around in log files. I could write a new tool that reads the logs in real time and copies the output somewhere else. But then I’d have to parse the log, which might be messy and brittle, and my log reader might collide with Airflow’s log writer when both access the same log file at once. Surely there’s a cleaner, more robust way.
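For a sense of what that parsing would involve, here is a minimal sketch of the log-reading approach I decided against. It assumes the log lines look exactly like the excerpt above, including the “Output:” and “Command exited with return code” markers; the names LOG_LINE and extract_prints are hypothetical, and the format may differ across Airflow versions and logging configurations.

```python
import re

# Matches lines shaped like the excerpt above, e.g.
# [2023-09-01T18:35:16.035-0400] {subprocess.py:93} INFO - MEOW
# This shape is an assumption taken from that excerpt only.
LOG_LINE = re.compile(
    r"^\[(?P<timestamp>[^\]]+)\] \{(?P<source>[^:}]+):(?P<lineno>\d+)\} "
    r"(?P<level>[A-Z]+) - ?(?P<message>.*)$"
)


def extract_prints(log_lines):
    """Return the messages that the task itself printed."""
    prints = []
    in_output = False
    for line in log_lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        # Skip anything that is not a subprocess.py record.
        if match is None or match["source"] != "subprocess.py":
            continue
        message = match["message"]
        if message == "Output:":
            in_output = True       # the task's own output starts after this marker
        elif message.startswith("Command exited with return code"):
            in_output = False      # the task's own output has ended
        elif in_output:
            prints.append(message)
    return prints
```

Even this small version shows the fragility: a module that happens to print “Output:” itself, or a change in the log format, would silently break it.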

A better solution

The key is to redirect standard output (stdout), which normally goes to the terminal, to someplace where I can see it while Airflow is running the module. Maybe if I could get a window to pop up…

…And it turns out that there’s a very convenient solution. The tkinter package is Python’s interface to the Tk GUI toolkit, and it’s part of the Python standard library, so I don’t have to reconfigure my virtual environments.

The details

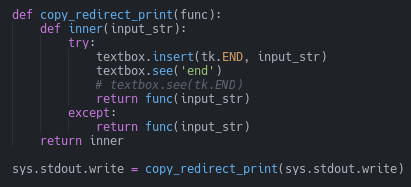

I found a very nice solution that wraps standard output, so that the prints are directed to both the pop-up window and the terminal, if one is available. The latter is useful because, if I’m running a module independently without Airflow, I still have the print output in the terminal; I don’t have to give that up. The core of my adapted solution is shown in the screenshot above.

The last line in that screenshot shows where we wrap standard output.

You might also notice the see operation in the screenshot above. As the pop-up window fills with print statements, the new prints eventually run past the bottom of the box. The see… end operation scrolls the view of the window down, so that we can always see the most recent print.
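Since the code here appears only as a screenshot, the following is a rough text sketch of the same idea, not a copy of my actual code. The names TeeStream, open_output_window, and redirect_prints_to_window are hypothetical. The sketch assumes a plain tkinter Text widget and imports tkinter lazily, so that the wrapper class can still be used where no display is available.

```python
import sys


class TeeStream:
    """File-like object that forwards each write to a sink and, if present, a terminal."""

    def __init__(self, sink, terminal=None):
        self.sink = sink          # callable taking a str, e.g. one that appends to a Text widget
        self.terminal = terminal  # the original stdout, or None if there is no terminal

    def write(self, text):
        self.sink(text)
        if self.terminal is not None:
            self.terminal.write(text)
        return len(text)

    def flush(self):
        if self.terminal is not None:
            self.terminal.flush()


def open_output_window(title="module output"):
    """Create a pop-up window and return (root, sink). Requires a display."""
    import tkinter as tk  # lazy import: only needed when a window is requested

    root = tk.Tk()
    root.title(title)
    box = tk.Text(root, width=80, height=24)
    box.pack(fill=tk.BOTH, expand=True)

    def sink(text):
        box.insert(tk.END, text)  # append the printed text at the end of the box
        box.see(tk.END)           # scroll so the newest print stays visible

    return root, sink


def redirect_prints_to_window():
    """Wrap stdout so that print() reaches both the window and the terminal."""
    root, sink = open_output_window()
    sys.stdout = TeeStream(sink, sys.stdout)
    return root
```

Keeping the window handling behind a plain callable means the wrapper itself never touches tkinter, which makes it easy to try out without a GUI.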

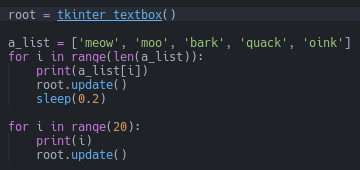

Another issue arises when printing output from loops. Ordinarily, the output won’t appear in our window until after the loop completes, because tkinter only redraws the window when its event loop runs or when it is explicitly told to update. However, I have some long-running loops in some of my modules; waiting for them to finish defeats the purpose of having real-time updates on the module’s progress. The solution is to explicitly update the window, as in the accompanying screenshot.

Notice that there’s an update operation in each of the for loops.
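In text form, the pattern looks roughly like the sketch below. The function crunch and its squaring “work” are hypothetical stand-ins for a real module; the window argument is assumed to be the tkinter root (tk.Tk), whose update method processes pending events so that newly inserted text is drawn right away.

```python
def crunch(items, window=None):
    """Process items, printing progress and refreshing the window after each one."""
    results = []
    for index, item in enumerate(items, start=1):
        results.append(item * item)  # stand-in for the real, slow work
        print(f"processed {index} of {len(items)}")
        if window is not None:
            window.update()  # redraw now instead of waiting for the loop to finish
    return results
```

Making the window optional keeps the function usable when the module runs in a plain terminal with no pop-up at all.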

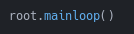

Finally, a lot of tkinter examples online end with a mainloop operation, which runs the GUI event loop and keeps the window open until the user closes it.

Since I want my pop-up window to close at the end of the module, so that Airflow continues to the next module, I omit this mainloop operation in my modules.
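Simply leaving out mainloop is enough for my purposes, since the window disappears when the module’s process exits. For anyone who prefers to clean up explicitly, here is one possible sketch; it is an assumption on my part rather than what my modules do. The name run_with_window is hypothetical, stream is any file-like object that writes to the window, and window is assumed to be the tkinter root, whose destroy method closes it.

```python
import sys


def run_with_window(main, stream, window=None):
    """Run main() with stdout redirected to stream, then clean up without mainloop()."""
    original_stdout = sys.stdout
    sys.stdout = stream
    try:
        return main()
    finally:
        sys.stdout = original_stdout  # put the terminal back even if main() raised
        if window is not None:
            window.destroy()          # close the pop-up so the process can exit
```

Because nothing blocks after main returns, the process ends as soon as the module’s work is done, and Airflow can move on to the next task.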

The code

The code can be found in this repository.